Introduction

Welcome to this first post in my series on running R workloads in AWS. Today, we’ll explore how to use Docker to package R models effectively, providing a flexible and efficient solution for cloud migration. By the end of this post, you’ll understand:

- Why Docker is an excellent choice for R workloads in AWS

- How to implement a layered Docker architecture for R applications

- Best practices for Dockerizing R workloads

If you need a refresher on Docker, check out my LinkedIn article for background information.

Why Docker for R Workloads?

When migrating developed solutions to the cloud, we need a compute runtime that’s both flexible and efficient. Docker is the ideal choice for several reasons:

- Portability: Docker images can be easily moved between different AWS services, such as Lambda and ECS.

- Consistency: Docker ensures that your R environment remains consistent across development, testing, and production.

- Scalability: Containerized R workloads can be scaled out in the cloud by running more containers from the same image.
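Consistency also depends on the base image not drifting underneath you. The Enterprise R Dockerfile later in this post starts from `rocker/r-base:latest`. As an alternative, the R version can be pinned with a build argument. This is a minimal sketch, not the setup from my project: the default `R_VERSION` value is an assumption, so confirm that the tag exists on Docker Hub for `rocker/r-base` before relying on it.

```dockerfile
# Sketch: pin the R version through a build argument instead of :latest.
# The default below is an assumed example; use the version your team targets.
ARG R_VERSION=4.3.2
FROM rocker/r-base:${R_VERSION}

# ...the rest of the Enterprise R Dockerfile continues unchanged...
```

The default can be overridden at build time, for example with `docker build --build-arg R_VERSION=<version> .`. Note that an `ARG` declared before `FROM` is only visible to `FROM` itself; to use it in later instructions, declare `ARG R_VERSION` again after the `FROM` line.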

Docker layered architecture

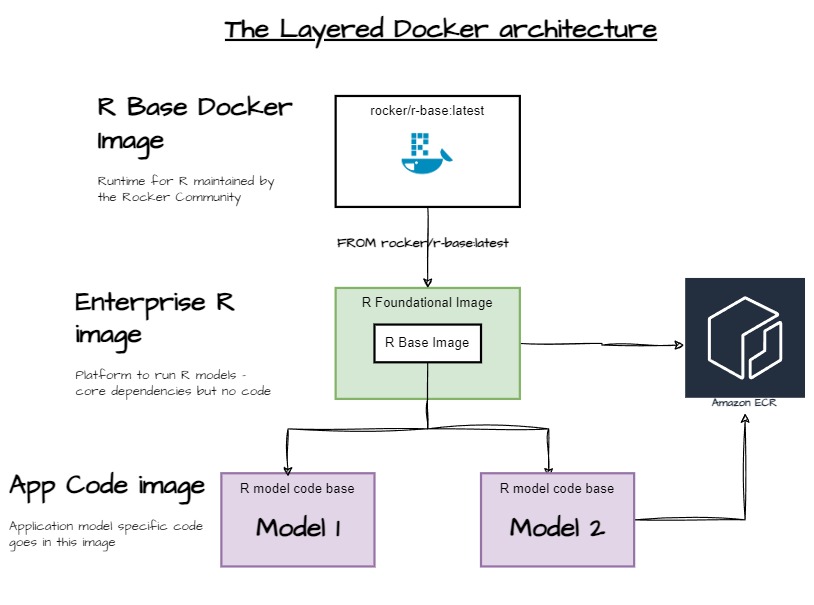

A Docker image packages an application together with its specific dependencies, frozen in time. Unlike a virtual machine, a container shares the host's kernel, which keeps images lightweight. We create layers of images that stack on top of each other. Why? Layering allows for separation of concerns. The R base Docker image is maintained by the Rocker Project community, and we can reuse it by building layers that inherit from it.

Dependencies rarely change, but your code will. The diagram above shows the architecture of the layers I used in the solution. ECR is Amazon Elastic Container Registry, where the Docker images are stored in your AWS account. We will cover this in more detail when we get to automating builds with GitHub Actions and ECS Fargate later in this series.

Why not just put everything in one image and call it a day? That is possible, but this layered architecture has some key benefits:

- Build time: When I first started this project, I put everything in the middle layer shown above. Every time there was a code change, the build took almost 90 minutes, because it had to re-download and reinstall all of the dependencies. Once I separated the app code into its own model image that inherits from the Enterprise R image, the dependencies were already installed, saving precious time on each build.

- Reusability: As the diagram shows, a reusable Enterprise R image can be shared across any new models, giving them a common standard for dependencies and packages.

- Easier maintenance: The Enterprise R image rarely changed, unless one of the data scientists needed a new package or there was a new dependency. I could test any change in this independent base image without affecting other parts of the system.

Building our Docker image for R workloads

Below is a sample Dockerfile for the Enterprise R image. This is the middle layer in the diagram above.

```dockerfile
# Use the official R base image maintained by the Rocker Project community
FROM rocker/r-base:latest

# Install system libraries needed to build common R packages
RUN apt-get update && apt-get install -y \
    libcurl4-openssl-dev \
    libssl-dev \
    libxml2-dev

# Install system libraries that R graphics and text-rendering packages depend on
RUN apt-get install -y libfontconfig1-dev libharfbuzz-dev libfribidi-dev \
    libfreetype6-dev libpng-dev libtiff5-dev libjpeg-dev

# Copy in and run the script that installs all the R packages
COPY install_packages.R /code/install_packages.R
RUN Rscript /code/install_packages.R

# This image now has everything needed to run the application-specific models!
```

For the model-specific image, we can build on the Enterprise R base image:

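```dockerfile
# Inherit from the Enterprise R image stored in ECR
FROM <<Account ID>>.dkr.ecr.us-east-1.amazonaws.com/rbase:update_12312023

# Set the working directory (WORKDIR creates /code if it does not exist)
WORKDIR /code

# Copy the model's R scripts (a multi-file COPY needs a trailing slash on the destination)
COPY *.R /code/

# Run the Rscript executable with the model's script as a parameter
CMD ["Rscript", "/code/My.model.run.R"]
```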

The Dockerfile starts with the FROM instruction, which pulls the Enterprise R base image from ECR using the specific tag update_12312023. Pinning a dated tag means the model always builds on a known set of dependencies.

Rscript is the command-line front end that ships with R. It runs an R script non-interactively from the shell, which makes it well suited to scripting and automation tasks. The container starts the model by running Rscript on the model script, as specified in the CMD instruction on the last line of the Dockerfile.
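The model Dockerfile runs Rscript with the name of the model script as a parameter. If you want to swap that parameter at run time without rebuilding, one option is to split the command into an ENTRYPOINT and a default CMD. This is a hypothetical variant, not the Dockerfile from my project; the account ID placeholder, tag, and script name are carried over from the example above.

```dockerfile
# Hypothetical variant of the model image: Rscript is fixed as the
# entrypoint, and CMD only supplies the default script to run.
FROM <<Account ID>>.dkr.ecr.us-east-1.amazonaws.com/rbase:update_12312023

WORKDIR /code
COPY *.R /code/

# Always run R scripts through Rscript
ENTRYPOINT ["Rscript"]

# Default script; any arguments given to `docker run` after the image name replace it
CMD ["/code/My.model.run.R"]
```

With this layout, `docker run <image>` runs the default model, while `docker run <image> /code/other.model.run.R` runs a different script (`<image>` and `other.model.run.R` are placeholders). Arguments after the image name replace CMD but are still passed to the Rscript ENTRYPOINT.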

These Dockerfiles need to be built into images. You can do this locally on your machine, or have your CI/CD tool automate the process and push the images to a container registry such as Amazon ECR; we will cover that process later in this series. The Docker CLI is a tool installed in your environment that lets you run Docker commands. Collabnix has a very helpful Docker cheatsheet that covers the basic commands and more.

Best practices for Dockerizing applications

Dockerizing your codebase provides incredible flexibility in running the application in a variety of environments, from a MacBook to the cloud. While this post focuses on R, the layering approach I used applies to many other software stacks, including Java, Python and Node.js. Here are a few best practices, tools and approaches I have used in past projects to achieve this architecture:

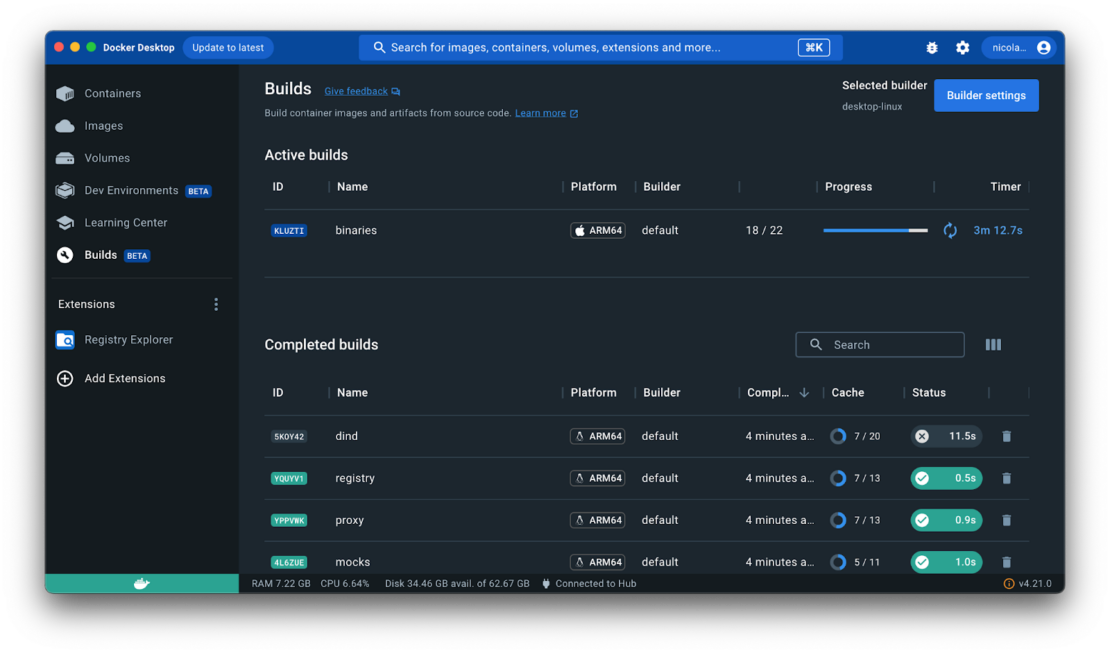

- Start locally: In every project, start by prototyping the containerized runtime in a local environment. Docker Desktop is the best way to do this, since it lets you build images and run containers from them. The Docker community has several resources to get you started; I recommend starting with Play with Docker.

- Keep Docker Desktop up to date: Update Docker Desktop regularly. I ran into a bug where building a Linux-based image with Docker Desktop on Windows started failing. You can find more details in this GitHub issue; one of the Rocker community maintainers helped me resolve it.

- Leverage the community: Docker and R are open source, and as my example above shows, the maintainers of these projects are invaluable resources who want to help.

- Integrate Docker with your codebase: Your application is a solution that involves many components: the code, Docker, the infrastructure, and so on. From a DevOps point of view, Docker is the bridge between your code and the compute runtime. Developers need to see how their code will run in Docker, and infrastructure engineers need to see the code. The better developers and infrastructure engineers understand the whole solution, the higher the release velocity.

- Optimize your images: Install only the dependencies your application actually needs. Smaller images build, push and pull faster, and contain fewer packages that could carry vulnerabilities.
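As an illustration of the last point, here is a sketch of how the Enterprise R image's system dependencies could be installed in a single, leaner layer. This is not the Dockerfile I used; it reuses the same package list from the earlier example and assumes none of those packages rely on apt's "recommended" extras, so test the R package installation after making a change like this.

```dockerfile
# Sketch: one RUN layer for all system dependencies.
# --no-install-recommends skips optional packages, and deleting the apt
# package lists in the same layer keeps them out of the final image.
FROM rocker/r-base:latest

RUN apt-get update \
    && apt-get install -y --no-install-recommends \
        libcurl4-openssl-dev \
        libssl-dev \
        libxml2-dev \
        libfontconfig1-dev \
        libharfbuzz-dev \
        libfribidi-dev \
        libfreetype6-dev \
        libpng-dev \
        libtiff5-dev \
        libjpeg-dev \
    && rm -rf /var/lib/apt/lists/*
```

The cleanup has to happen in the same RUN instruction as the install: files deleted in a later layer still take up space in the earlier one. Because the package lists are removed, any later `apt-get install` in a child image needs its own `apt-get update` first.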

Conclusion

Dockerizing R workloads in AWS offers numerous benefits, including improved portability, consistency and scalability. In this post we learned how a layered Docker architecture can promote a flexible, efficient and maintainable environment for your R applications in AWS.

Next we will dive into AWS ECS Fargate and how it was used to run the containers we learned about in this post!