Introduction

In our last post, we discussed how we can use a layered architecture with Docker to simplify and reuse images in the deployment of R-based models. In this post, we will review how to run our Docker model image in AWS using the Elastic Container Service (ECS) with the Fargate launch type.

By the end of this post, you’ll understand:

- What ECS Fargate is

- The core constructs in ECS, including Clusters, Services, and Task definitions

- The Elastic Container Registry (ECR) and how it’s used to store our images in the cloud

- How to build and deploy new images into ECS

What is AWS ECS Fargate?

Our challenge for this solution was to migrate our models to AWS and run them based on events or a schedule. Docker is established as the deployment unit for our model, and we reviewed how we can run it locally using Docker Desktop. This is fine for local development, but now we need to move this to the cloud.

Elastic Container Service is an AWS service that scales and runs containers in the cloud. Here are a few key points about this service:

- Fully managed: AWS operates the ECS control plane, and ECS can scale the number of running tasks based on workload rules. With the EC2 launch type, you also provide the underlying instances, which ECS can scale through capacity providers. As we will learn, ECS Fargate removes that layer entirely: there are no EC2 instances to manage, and containers run in a completely serverless manner.

- Deep AWS Integration: ECS integrates with many AWS services, including VPC, IAM, and CloudWatch. This simplifies security, load balancing, and overall infrastructure management.

- Deployment options: ECS offers two main launch types, EC2 and Fargate, plus an External launch type for running tasks on your own on-premises servers or VMs. The EC2 option allows for more control of instances, while Fargate is the serverless option that we will focus on in this post.

- Implementation Simplicity: ECS is a scalable yet simple container orchestration service. As we will review in this post, setting it up is fairly straightforward with constructs that can be managed as code in our GitHub repository.

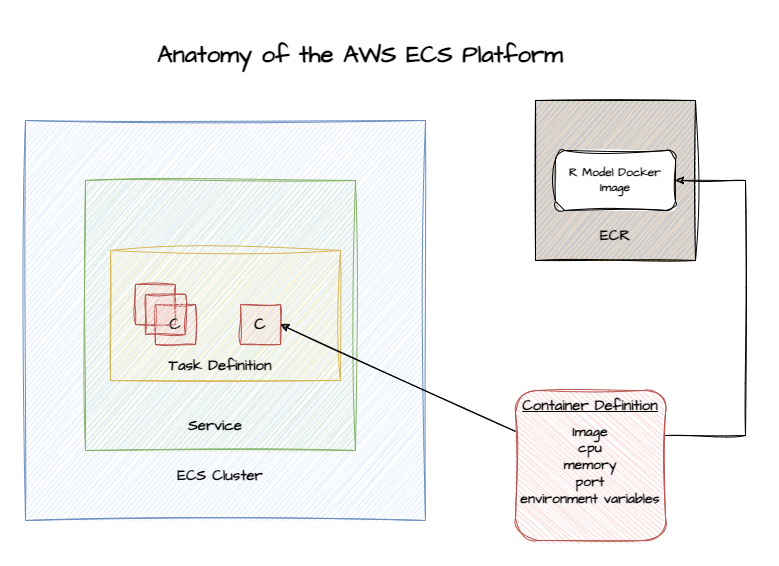

Anatomy of ECS Components

The AWS ECS platform has a few core components that are important to understand, as referenced in the diagram above:

- Elastic Container Registry (ECR): While ECR is a separate service, it is where our R model Docker image is ultimately stored.

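- ECS Task Definition: With a Docker image in ECR, we create a task definition. The task definition is a JSON document that describes how the application runs: which container images to use, how much CPU and memory to allocate, the networking mode, the IAM roles, and the logging configuration.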

- Container definition: When we create the task definition, we define the containers that actually comprise the application. The container definition describes details about each specific container.

- Service: The Service is the component that allows us to scale our solution. It provides levers like the number of tasks that should be running (the desired count), and it replaces tasks that stop or fail.

- Task: A task is a running instance of that task definition. When you run a task, ECS takes the task definition and creates a running container or set of containers based on those specifications.

- Cluster: Services and tasks run in a Cluster, a logical grouping of the tasks and services for a given application.
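For the event- or schedule-driven runs this solution needs, a task can also be launched directly in a cluster without a service. The sketch below assembles the request for the ECS `RunTask` API as a plain Python dictionary. The `build_run_task_request` helper, the cluster name, and the subnet and security-group IDs are hypothetical placeholders; `my-task-definition`, `my-container`, and the `env` variable come from the sample task definition later in this post.

```python
import json
from typing import Dict, List, Optional


def build_run_task_request(
    cluster: str,
    task_definition: str,
    subnets: List[str],
    security_groups: List[str],
    container_name: str,
    env_overrides: Optional[Dict[str, str]] = None,
) -> dict:
    """Build keyword arguments for a Fargate RunTask call (hypothetical helper)."""
    request = {
        "cluster": cluster,
        # Family name (latest ACTIVE revision) or "family:revision".
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "count": 1,
        # Fargate tasks use the awsvpc network mode, so every task needs subnets.
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
                # DISABLED assumes private subnets with a NAT gateway or VPC
                # endpoints so the task can still reach ECR and CloudWatch Logs.
                "assignPublicIp": "DISABLED",
            }
        },
    }
    if env_overrides:
        # Override environment variables for this run only; the task
        # definition itself is left unchanged.
        request["overrides"] = {
            "containerOverrides": [
                {
                    "name": container_name,
                    "environment": [
                        {"name": k, "value": v}
                        for k, v in sorted(env_overrides.items())
                    ],
                }
            ]
        }
    return request


if __name__ == "__main__":
    request = build_run_task_request(
        cluster="my-cluster",  # hypothetical
        task_definition="my-task-definition",
        subnets=["subnet-0123456789abcdef0"],  # hypothetical
        security_groups=["sg-0123456789abcdef0"],  # hypothetical
        container_name="my-container",
        env_overrides={"env": "DEV"},
    )
    print(json.dumps(request, indent=2))
```

With boto3 installed and AWS credentials configured, the same dictionary could be submitted with `boto3.client("ecs").run_task(**request)`. Keeping the request builder free of AWS calls makes it easy to unit test.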

Let’s take a look at some sections from a sample task definition below. I’ve reduced it to the sections we will review. Please refer to the ECS task definition documentation for all required components of the JSON structure.

- Task resources: You might notice that there are CPU and memory settings at both the task definition level and the container definition level. The task-level settings represent the total resources allocated to the entire task, while the container-level settings apply to that specific container. CPU is expressed in CPU units (1,024 units = 1 vCPU) and memory in MiB, so the values below give the task 4 vCPU and 8 GiB of memory.

- Environment variables: Environment variables allow us to inject values into a running container, giving us flexibility in what happens inside it. In this example, I am setting an `env` variable to `PROD`. When the R code runs inside the container, it can read that variable to choose environment-specific settings such as secret names or bucket names.

- Log configuration: Use `logConfiguration` to set logging parameters. This takes the output of your running container and sends it to a logging platform. The example below is set to `awslogs`, which sends everything to CloudWatch Logs using the specified log group. ECS supports other log drivers as well (fluentd, gelf, json-file, journald, logentries, splunk, syslog, and awsfirelens), but Fargate tasks are limited to `awslogs`, `splunk`, and `awsfirelens`.

- Launch type: `requiresCompatibilities` specifies the launch types the task definition is validated against. In this case, it’s set to `FARGATE`; the other options are `EC2` and `EXTERNAL`. Fargate is a fully managed, serverless runtime for the task. EC2 uses a fleet of EC2 instances running the ECS container agent and Docker to host the containers. In EC2 mode, these instances typically stay up, consuming resources and incurring costs even when no task is running. In our use case, we wanted the solution to run only when there was a model to run, so Fargate was a perfect choice.
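Inside the container, the model code only needs to read the injected variable and write its logs to standard output: with the `awslogs` driver, whatever the container writes to stdout and stderr is forwarded to CloudWatch Logs. The author’s models are written in R, so this Python version only illustrates the pattern. The `SETTINGS` mapping, the bucket names, the `load_settings` helper, and the choice to default to `DEV` when `env` is unset are all hypothetical.

```python
import json
import logging
import os
import sys

# Hypothetical per-environment settings keyed by the value of the "env" variable.
SETTINGS = {
    "PROD": {"input_bucket": "my-models-prod-input"},
    "DEV": {"input_bucket": "my-models-dev-input"},
}


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps(
            {
                "level": record.levelname,
                "logger": record.name,
                "message": record.getMessage(),
            }
        )


def configure_logging() -> logging.Logger:
    # awslogs captures stdout/stderr, so no log files or extra agents are needed.
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger("model")
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def load_settings(environ=os.environ) -> dict:
    # "env" matches the variable set in the task definition; default to DEV
    # so the container can also run locally without extra configuration.
    env = environ.get("env", "DEV")
    if env not in SETTINGS:
        raise ValueError(f"Unknown environment: {env!r}")
    return {"env": env, **SETTINGS[env]}


if __name__ == "__main__":
    log = configure_logging()
    settings = load_settings()
    log.info(
        "Starting model run in %s, reading from %s",
        settings["env"],
        settings["input_bucket"],
    )
```

One JSON object per line keeps each log event self-contained, which makes the output easier to filter in CloudWatch Logs Insights than free-form text.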

```json
{
  "family": "my-task-definition",
  "taskRoleArn": "arn:aws:iam::.....",
  "executionRoleArn": "arn:aws:iam::.....",
  "networkMode": "awsvpc",
  "containerDefinitions": [
    {
      "name": "my-container",
      "image": "",
      "cpu": 4096,
      "memory": 8192,
      "links": [],
      "environment": [
        {
          "name": "env",
          "value": "PROD"
        }
      ],
      "logConfiguration": {
        "logDriver": "awslogs",
        "options": {
          "awslogs-group": "/ecs/my-task-definition",
          "awslogs-region": "us-east-2",
          "awslogs-stream-prefix": "ecs"
        }
      }
    }
  ],
  "requiresCompatibilities": [
    "FARGATE"
  ],
  "cpu": 4096,
  "memory": 8192,
  "tags": []
}
```
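Because Fargate only accepts specific task-level CPU and memory pairings, and container-level values must fit inside the task-level totals, a quick check before registering a task definition like the one above can catch mistakes early. This is a sketch: the size table reflects the AWS Fargate documentation at the time of writing and should be verified against the current docs, and `check_fargate_resources` is a hypothetical helper that assumes numeric CPU units (not the `"4 vCPU"` string form).

```python
import json
from typing import List

# Fargate task-level CPU units -> allowed memory values in MiB (1024 units = 1 vCPU).
# Based on the AWS documentation at the time of writing; AWS adds sizes over time.
FARGATE_SIZES = {
    256: {512, 1024, 2048},
    512: set(range(1024, 4096 + 1, 1024)),
    1024: set(range(2048, 8192 + 1, 1024)),
    2048: set(range(4096, 16384 + 1, 1024)),
    4096: set(range(8192, 30720 + 1, 1024)),
    8192: set(range(16384, 61440 + 1, 4096)),
    16384: set(range(32768, 122880 + 1, 8192)),
}


def check_fargate_resources(task_def: dict) -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    cpu = int(task_def.get("cpu", 0))
    memory = int(task_def.get("memory", 0))
    if "FARGATE" in task_def.get("requiresCompatibilities", []):
        if task_def.get("networkMode") != "awsvpc":
            problems.append("Fargate tasks must use the awsvpc network mode")
        if memory not in FARGATE_SIZES.get(cpu, set()):
            problems.append(f"cpu={cpu} with memory={memory} is not a Fargate size")
    # Container-level values are optional, but their totals must fit in the task.
    containers = task_def.get("containerDefinitions", [])
    total_cpu = sum(int(c.get("cpu", 0)) for c in containers)
    total_mem = sum(int(c.get("memory", 0)) for c in containers)
    if total_cpu > cpu:
        problems.append(f"containers request {total_cpu} CPU units, task has {cpu}")
    if total_mem > memory:
        problems.append(f"containers request {total_mem} MiB, task has {memory}")
    return problems


if __name__ == "__main__":
    sample = json.loads(
        """
        {
          "networkMode": "awsvpc",
          "requiresCompatibilities": ["FARGATE"],
          "cpu": 4096,
          "memory": 8192,
          "containerDefinitions": [{"name": "my-container", "cpu": 4096, "memory": 8192}]
        }
        """
    )
    print(check_fargate_resources(sample))  # prints []
```

A check like this could run in CI before the task definition is registered, so an invalid size fails the build instead of the deployment.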

Best practices with AWS ECS

While ECS Fargate is a fairly straightforward container orchestration platform, there are some things to consider when using it to run your application.

- Container isolation and co-location: As we can see in the task definition above, the `containerDefinitions` section is a list, allowing for one or many containers. As you architect the containers in your solution, consider how they will be managed, scaled, and deployed. In our use case for these R-based models, we had a 1-to-1 relationship between the task definition and the container definition, and all resources for the task were assigned to that one container. There are scenarios where co-locating containers in a single task makes sense:

  - Sidecar pattern: A main application container and a supporting container are paired together. For example, if each model had a logging container or some other telemetry-based service container, they could run in the same task and start together.

  - Transformation pattern: If a component of the application needs to provide some kind of mediation or transformation on behalf of the main container, the two could be paired together.

  - Co-located services: While this is more common in proofs of concept (POCs), there are times when it makes sense to pair a web server and an app server as separate containers in the same task, allowing them to share the same local storage. In most production settings, these layers of the architecture should be scaled, managed, and deployed separately.

  - Tightly coupled microservices: Fine-grained microservices that are closely related and always scale together can be paired in a single task.

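- Logging best practices: ECS Fargate is a completely serverless solution, so there is no host to log in to when something goes wrong. ECS Exec can open a shell in a running container, but only if it was enabled ahead of time, and a failed task may already have stopped by the time you look. Without the right logging in place, you will be flying blind when issues come up.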

- IAM and Security: As seen in the task definition above, there are two important roles for the task, `taskRoleArn` and `executionRoleArn`:

  - Task role: Think of the task role as an instance role for your application. It defines the privileges the container needs to access AWS services, such as S3 buckets, an SQS queue, or a secret in Secrets Manager. Always apply the principle of least privilege to this role to reduce the potential attack surface of the solution.

  - Task execution role: The task execution role is the administration role ECS uses on behalf of the task. It grants the ECS agent permission to make the AWS API calls needed to manage the task, such as pulling container images from ECR and sending logs to CloudWatch.

- ECS Launch Type: We have focused on ECS in this article and specifically ECS Fargate. As we have learned, there are other launch types for the ECS service, including EC2 and External. Choosing between the different launch types depends on your use case. I have compiled a table below for some considerations:

| Consideration | EC2 | Fargate | External |
| --- | --- | --- | --- |
| Control over infrastructure | High | Low | Varies |
| Operational overhead | High. Under this launch type, instances need to be managed like any other instances in your environment | Low | Varies |
| Cost-effectiveness | Best if there is consistent utilization | Better for variable workloads | Depends on existing infrastructure |
| Scalability | More setup required; instances must be scaled along with tasks | Simpler; only tasks need to scale | Depends on external infrastructure |
| Resource optimization | Fine-grained control | AWS-managed | User-managed |
| Security | User-managed, potentially more secure | Isolated by design | Depends on external security measures |
| Compliance requirements | Easier to meet specific requirements | May have limitations | Depends on external environment |
| Performance customization | High (e.g., GPU instances) | Limited. As of 2024, GPU instances are not available in Fargate | Depends on available hardware |
| Long-running tasks | Well-suited | Can be costly. For the models in this use case, run time was under 15 minutes; if a model took hours, Fargate might not be optimal | Well-suited if resources are available |
| Networking customization | High | Limited | Depends on external network |
| Storage options | More options, including FSx and Docker native volumes | Fewer options: supports EBS, EFS, and ephemeral storage | Depends on external storage |
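Several of these practices can be captured when task definitions are generated as code and kept in the repository. The sketch below, a hypothetical `build_task_definition` helper, produces a Fargate task definition with the task and execution roles, an `awslogs` configuration for every container, and an optional sidecar that the main container waits on through `dependsOn`. The container names `model` and `sidecar`, the image URIs, the role ARNs, and the 256 CPU / 512 MiB sidecar sizing are illustrative assumptions, not values from the author’s setup.

```python
from typing import Optional


def log_config(family: str, region: str) -> dict:
    # Send container stdout/stderr to CloudWatch Logs, mirroring the sample task definition.
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": f"/ecs/{family}",
            "awslogs-region": region,
            "awslogs-stream-prefix": "ecs",
        },
    }


def build_task_definition(
    family: str,
    image: str,
    task_role_arn: str,
    execution_role_arn: str,
    region: str,
    cpu: int = 4096,
    memory: int = 8192,
    sidecar_image: Optional[str] = None,
    sidecar_cpu: int = 256,
    sidecar_memory: int = 512,
) -> dict:
    """Build a Fargate task definition dict (hypothetical helper)."""
    if sidecar_image and (sidecar_cpu >= cpu or sidecar_memory >= memory):
        raise ValueError("sidecar resources must be smaller than the task's")
    main = {
        "name": "model",
        "image": image,
        "essential": True,  # if the model container stops, the whole task stops
        "cpu": cpu,
        "memory": memory,
        "logConfiguration": log_config(family, region),
    }
    containers = [main]
    if sidecar_image:
        # Sidecar pattern: give the helper a small slice and the model the rest.
        main["cpu"] = cpu - sidecar_cpu
        main["memory"] = memory - sidecar_memory
        # Start the model only after the sidecar container has started.
        main["dependsOn"] = [{"containerName": "sidecar", "condition": "START"}]
        containers.append(
            {
                "name": "sidecar",
                "image": sidecar_image,
                "essential": False,  # a sidecar failure does not stop the model run
                "cpu": sidecar_cpu,
                "memory": sidecar_memory,
                "logConfiguration": log_config(family, region),
            }
        )
    return {
        "family": family,
        # Task role: what the model code may call (S3, SQS, Secrets Manager); keep it least-privilege.
        "taskRoleArn": task_role_arn,
        # Execution role: lets ECS pull the image from ECR and write logs to CloudWatch.
        "executionRoleArn": execution_role_arn,
        "networkMode": "awsvpc",
        "requiresCompatibilities": ["FARGATE"],
        # The RegisterTaskDefinition API takes task-level cpu/memory as strings.
        "cpu": str(cpu),
        "memory": str(memory),
        "containerDefinitions": containers,
    }
```

With boto3 installed and credentials configured, the result could be registered with `boto3.client("ecs").register_task_definition(**task_def)`. Leaving out `sidecar_image` gives the 1-to-1 task-to-container layout used for the R models in this post.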

Conclusion

AWS ECS is a comprehensive and powerful container orchestration platform. It supports the “bring your own container” model, where AWS handles the scaling and running of your containers for you. By leveraging ECS, particularly with Fargate, you can focus on developing your R models while AWS manages the underlying infrastructure. This approach can lead to more efficient deployments, easier scaling, and potentially lower operational overhead.

In our next post, we’ll cover the process of building a CI/CD pipeline using GitHub Actions to deploy new versions of our R model into ECR and update our task definition in a completely automated manner. This will demonstrate how to integrate ECS into a modern, efficient development workflow.

Stay tuned to learn how to streamline your R model deployment process even further!